By John Gruber

- Daring Fireball Weekly Sponsorship Openings ★

Weekly sponsorships have been the top source of revenue for Daring Fireball ever since I started selling them back in 2007. They’ve succeeded, I think, because they make everyone happy. They generate good money. There’s only one sponsor per week and the sponsors are always relevant to at least some sizable portion of the DF audience, so you, the reader, are never annoyed and hopefully often intrigued by them. And, from the sponsors’ perspective, they work. My favorite thing about them is how many sponsors return for subsequent weeks after seeing the results.

Sponsorships have been selling briskly, of late. There are only three weeks open between now and the end of June. But one of those open weeks is next week, starting this coming Monday:

- March 9–15 (next week)

- April 20–26

- May 25–31

I’m also booking sponsorships for Q3 2026, and roughly half of those weeks are already sold.

If you’ve got a product or service you think would be of interest to DF’s audience of people obsessed with high quality and good design, get in touch — especially if you can act quick for next week’s opening.

- Google’s Threat Intelligence Group on Coruna, a Powerful iOS Exploit Kit of Mysterious Origin ★

Google Threat Intelligence Group, earlier this week:

Google Threat Intelligence Group (GTIG) has identified a new and powerful exploit kit targeting Apple iPhone models running iOS version 13.0 (released in September 2019) up to version 17.2.1 (released in December 2023). The exploit kit, named “Coruna” by its developers, contained five full iOS exploit chains and a total of 23 exploits. The core technical value of this exploit kit lies in its comprehensive collection of iOS exploits, with the most advanced ones using non-public exploitation techniques and mitigation bypasses.

The Coruna exploit kit provides another example of how sophisticated capabilities proliferate. Over the course of 2025, GTIG tracked its use in highly targeted operations initially conducted by a customer of a surveillance vendor, then observed its deployment in watering hole attacks targeting Ukrainian users by UNC6353, a suspected Russian espionage group. We then retrieved the complete exploit kit when it was later used in broad-scale campaigns by UNC6691, a financially motivated threat actor operating from China. How this proliferation occurred is unclear, but suggests an active market for “second hand” zero-day exploits. Beyond these identified exploits, multiple threat actors have now acquired advanced exploitation techniques that can be re-used and modified with newly identified vulnerabilities.

- ‘The Window Chrome of Our Discontent’ ★

Nick Heer, writing at Pixel Envy, uses Pages (from 2009 through today) to illustrate Apple’s march toward putting “greater focus on your content” by making window chrome, and toolbar icons, more and more invisible:

Perhaps Apple has some user studies that suggest otherwise, but I cannot see how dialling back the lines between interface and document is supposed to be beneficial for the user. It does not, in my use, result in less distraction while I am working in these apps. In fact, it often does the opposite. I do not think the prescription is rolling back to a decade-old design language. However, I think Apple should consider exploring the wealth of variables it can change to differentiate tools within toolbars, and to more clearly delineate window chrome from document.

This entire idea that application window chrome should disappear is madness. Some people — at Apple, quite obviously — think it looks better, in the abstract, but I can’t see how it makes actually using these apps more productive. Artists don’t want to use invisible tools.

Clean lines between content and application chrome are clarifying, not distracting. It’s also useful to be able to tell, at a glance, which application is which. I look at Heer’s screenshot of the new version of Pages running on MacOS 26 Tahoe and not only can I not tell at a glance that it’s Pages, I can’t even tell at a glance that it’s a document word processor, especially with the formatting sidebar hidden. One of the worst aspects of Liquid Glass, across all platforms, but exemplified by MacOS 26, is that all apps look exactly the same. Not just different apps that are in the same category, but different apps from entirely different categories. Safari looks like Mail looks like Pages looks like the Finder — even though web browsers, email clients, word processors, and file browsers aren’t anything alike.

- The Verge Interviews Tim Sweeney After Victory in ‘Epic v. Google’ ★

The Verge:

Sean Hollister: What would you say the differences are between the Apple and Google cases?

Tim Sweeney: I would say Apple was ice and Google was fire.

The thing with Apple is all of their antitrust trickery is internal to the company. They use their store, their payments, they force developers to all have the same terms, they force OEMs and carriers to all have the same terms.

Whereas Google, to achieve things with Android, they were going around and paying off game developers, dozens of game developers, to not compete. And they’re paying off dozens of carriers and OEMs to not compete — and when all of these different companies do deals together, lots of people put things in writing, and it’s right there for everybody to read and to see plainly.

I think the Apple case would be no less interesting if we could see all of their internal thoughts and deliberations, but Apple was not putting it in writing, whereas Google was. You know, I think Apple is... it’s a little bit unfortunate that in a lot of ways Apple’s restrictions on competition are absolute. Thou shalt not have a competing store on iOS and thou shalt not use a competing payment method. And I think Apple should be receiving at least as harsh antitrust scrutiny as Google.

Interesting interview, for sure — but it’s from December 2023, when Epic scored its first court victory against Google. And, notably, it came before Sweeney signed away his right to criticize Google or the Play Store.

But I don’t see Epic’s ultimate victory in the lawsuit as a win for Android users, and I don’t think it’s much of a win for Android developers either. These new terms from Google just seem confusing and complicated, with varying rates for “existing installs” vs. “new installs”.

- Tim Sweeney Signed Away His Right to Criticize Google’s Play Store Until 2032 ★

Sean Hollister, writing for The Verge:

But Google has finally muzzled Tim Sweeney. It’s right there in a binding term sheet for his settlement with Google.

On March 3rd, he not only signed away Epic’s rights to sue and disparage the company over anything covered in the term sheet — Google’s app distribution practices, its fees, how it treats games and apps — he signed away his right to advocate for any further changes to Google’s app store policies, too. He can’t criticize Google’s app store practices. In fact, he has to praise them.

The contract states that “Epic believes that the Google and Android platform, with the changes in this term sheet, are procompetitive and a model for app store / platform operations, and will make good faith efforts to advocate for the same.” [...]

And while Epic can still be part of the “Coalition for App Fairness,” the organization that Epic quietly and solely funded to be its attack dog against Google and Apple, he can only point that organization at Apple now.

Sounds like a highly credible coalition that truly stands for fairness to me.

- The MacBook Neo’s Price, Looking to the Past and Future ★

Ethan W. Anderson, on Twitter/X:

I’ve plotted the most expensive McDonald’s burger and the least expensive MacBook over time. This analysis projects that the most expensive burger will be more expensive than the cheapest laptop as soon as 2081.

Looking to the past, if you plug $599 in today’s money into an inflation calculator, that’s just ~$190 in 1984, the year the original Macintosh launched with a price of $2,495 (which works out to ~$7,800 today).
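As a rough check on those two conversions, the sketch below derives the inflation multiplier implied by the first pair of figures ($599 today, ~$190 in 1984) and applies it to the original Macintosh's $2,495 price. The multiplier is inferred from the rounded numbers quoted above, not taken from any official index, so treat the result as approximate.

```python
# Figures quoted in the text. The multiplier is inferred from them,
# not read from an official CPI table (an assumption of this sketch).
NEO_PRICE_TODAY = 599
NEO_PRICE_IN_1984_DOLLARS = 190
MAC_1984_PRICE = 2495


def implied_multiplier(today: float, then: float) -> float:
    """Ratio of today's dollars to 1984 dollars implied by one price pair."""
    return today / then


multiplier = implied_multiplier(NEO_PRICE_TODAY, NEO_PRICE_IN_1984_DOLLARS)
mac_price_today = MAC_1984_PRICE * multiplier

print(f"Implied multiplier: {multiplier:.2f}x")
print(f"Original Macintosh in today's dollars: ~${mac_price_today:,.0f}")
```

The result lands within rounding distance of the ~$7,800 figure, which is about as close as two-significant-digit inputs allow.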

- ‘Never the Same Game Twice’ ★

John McCoy:

From around 1970 to 1980, the Salem, Massachusetts-based Parker Brothers (now a brand of Hasbro) published games whose innovative and fanciful designs drew inspiration from Pop Art, Op Art, and Madison Avenue advertising. They had boxes, boards, and components that reflected the most current techniques of printing and plastics molding. They were witty, silly, and weird. The other main players in American games at the time were Milton-Bradley, whose art tended towards cartoony, corny, and flat designs, and Ideal, whose games (like Mousetrap) were mostly showcases for their novel plastic components.

Parker Brothers design stood out for its style and sophistication, and even as a young nerd I could see that it was special. In fact, I believe they were my introduction, at the age of seven, to the whole concept of graphic design. This isn’t to say that the games were good in the sense of being fun or engaging to play; a lot of them were re-skinned versions of the basic race-around-the-board type that had been popular since the Uncle Wiggly Game. But they looked amazing and they were different.

These games mostly sucked but they looked cool as shit. Lot of memories for me in this post.

- Another Steve Jobs Quote on Lower-Priced Macs ★

Steve Jobs, on Apple’s quarterly results call back in October 2008:

There are some customers which we choose not to serve. We don’t know how to make a $500 computer that’s not a piece of junk, and our DNA will not let us ship that.

Harry McCracken, writing at the time:

With that out of the way, the question that folks have been asking lately about whether Apple will or should release a netbook-like Mac is fascinating. Regardless of whether the company ever does unveil a small, cheap, simple Mac notebook, it’s fun to think about the prospect of one. And I’ve come to the conclusion that such a machine could be in the works, in a manner that’s consistent with the Apple way and the company’s product line as it stands today. I’m not calling this a prediction. But it is a scenario.

Apple made many $500 “computers” in the years between then and now. But they were iPads, not Macs. I think part of the impetus behind the MacBook Neo is an acknowledgement that as popular as iPads are, and for as many people who use them as their primary larger-than-a-phone computing device, there are a lot of other people, and a lot of use cases, that demand a PC. And from Apple, that means a Mac.

Thursday, 5 March 2026

- Steve Jobs in 2007, on Apple’s Pursuit of PC Market Share: ‘We Just Can’t Ship Junk’ ★

In August 2007, Apple held a Mac event in the Infinite Loop Town Hall auditorium. New iMacs, iLife ’08 (major updates to iPhoto and iMovie), and iWork ’08 (including the debut of Numbers 1.0). Back then, believe it or not, at the end of these Town Hall events, Apple executives would sit on stools and take questions from the media. For this one, Steve Jobs was flanked by Tim Cook and Phil Schiller. Molly Wood, then at CNet, asked, “And so, I guess once and for all, is it your goal to overtake the PC in market share?”

The audience — along with Cook, Jobs, and Schiller — chuckled. And then Jobs answered. You should watch the video — it’s just two minutes — but here’s what he said:

I can tell you what our goal is. Our goal is to make the best personal computers in the world and to make products we are proud to sell and would recommend to our family and friends. And we want to do that at the lowest prices we can. But I have to tell you, there’s some stuff in our industry that we wouldn’t be proud to ship, that we wouldn’t be proud to recommend to our family and friends. And we can’t do it. We just can’t ship junk.

So there are thresholds that we can’t cross because of who we are. But we want to make the best personal computers in the industry. And we think there’s a very significant slice of the industry that wants that too. And what you’ll find is our products are usually not premium priced. You go and price out our competitors’ products, and you add the features that you have to add to make them useful, and you’ll find in some cases they are more expensive than our products. The difference is we don’t offer stripped-down lousy products. We just don’t offer categories of products like that. But if you move those aside and compare us with our competitors, I think we compare pretty favorably. And a lot of people have been doing that, and saying that now, for the last 18 months.

Steve Jobs would have loved the MacBook Neo. Everything about it, right down to the fact that Apple is responsible for the silicon.

Thoughts and Observations on the MacBook Neo

Wednesday, 4 March 2026

$599. Not a piece of junk.

That’s not a marketing slogan from Apple for the new MacBook Neo. But it could be. And it is the underlying message of the product. For a few years now, Apple has quietly dabbled with the sub-$1,000 laptop market, by selling the base configuration of the M1 MacBook Air — a machine that debuted in November 2020 — at retailers like Walmart for under $700. But dabbling is the right word. Apple has never ventured under the magic $999 price point for a MacBook available in its own stores.

As of today, they’re not just in the sub-$1,000 laptop market, they’re going in hard. The MacBook Neo is a very compelling $600 laptop, and for just $100 more, you get a configuration with Touch ID and double the storage (512 GB instead of 256).

You can argue that all MacBooks should have Touch ID. My first answer to that is “$599”. My second answer is “education”. Touch ID doesn’t really make sense for laptops shared by kids in a school. And with Apple’s $100 education pricing discount, the base MacBook Neo, at $499, is half the price of the base M5 MacBook Air ($1,099 retail, $999 education). Half the price.
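The “half the price” claim is easy to verify with the numbers above. This is a minimal sketch that assumes a flat $100 education discount on both machines, as the paragraph describes. The function and constant names are made up for illustration.

```python
EDU_DISCOUNT = 100  # flat education discount, per the text


def edu_price(retail: int) -> int:
    """Education price under the flat-discount assumption."""
    return retail - EDU_DISCOUNT


neo_retail, air_retail = 599, 1099
neo_edu, air_edu = edu_price(neo_retail), edu_price(air_retail)

retail_ratio = neo_retail / air_retail
edu_ratio = neo_edu / air_edu

print(f"Retail:    ${neo_retail} vs ${air_retail} ({retail_ratio:.1%})")
print(f"Education: ${neo_edu} vs ${air_edu} ({edu_ratio:.1%})")
```

At retail the Neo is a bit more than half the Air's price. It is only with the education discount applied to both that the ratio drops to almost exactly one half.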

I’m writing this from Apple’s hands-on “experience” in New York, amongst what I’d estimate as a few hundred members of the media. It’s a pretty big event, and a very big space inside some sort of empty warehouse on the western edge of Chelsea. Before playing the four-minute Neo introduction video (which you should watch — it’s embedded in Apple’s Newsroom post), John Ternus took the stage to address the audience. He emphasized that the Mac user base continues to grow, because “nearly half of Mac buyers are new to the platform”. Ternus didn’t say the following aloud, but Apple clearly knows what has kept a lot of would-be switchers from switching, and it’s the price. The Mac Mini is great, but normal people only buy laptops, and aside from the aforementioned dabbling with the five-year-old M1 MacBook Air and a brief exception when the MacBook Air dropped to $899 in 2014, Apple just hasn’t ventured under $999. “We just can’t ship junk,” Steve Jobs said back in 2007. It’s not that Apple never noticed the demand for laptops in the $500–700 range. It’s that they didn’t see how to make one that wasn’t junk.

Now they have. And the PC world should take note. One of my briefings today included a side-by-side comparison between a MacBook Neo and an HP 14-inch laptop “in the same price category”. It was something like this one, with an Intel Core 5 chip, which costs $550. The HP’s screen sucks (very dim, way lower resolution), the speakers suck, the keyboard sucks, and the trackpad sucks. It’s a thick, heavy, plasticky piece of junk. I didn’t put my nose to it, but I wouldn’t be surprised if it smells bad.

The MacBook Neo looks and feels every bit like a MacBook. Solid aluminum. Good keyboard (no backlighting, but supposedly the same mechanism as in other post-2019 MacBooks — felt great in my quick testing). Good trackpad (no Force Touch — it actually physically clicks, but you can click anywhere, not just the bottom). Good bright display (500 nits max, same as the MacBook Air). Surprisingly good speakers, in a new side-firing configuration. Without even turning either laptop on, you can just see and feel that the MacBook Neo is a vastly superior device.

And when you do turn them on, you see the vast difference in display quality and hear the vast difference in speaker quality. And you get MacOS, not Windows, which, even with Tahoe, remains the quintessential glass of ice water in hell for the computer industry.

I came into today’s event experience expecting a starting price of $799 for the Neo — $300 less than the new $1,099 price for the base M5 MacBook Air (which, in defense of that price, starts with 512 GB storage). $599 is a fucking statement. Apple is coming after this market. I think they’re going to sell a zillion of these things, and “almost half” of new Mac buyers being new to the platform is going to become “more than half”. The MacBook Neo is not a footnote or hobby, or a pricing stunt to get people in the door before upselling them to a MacBook Air. It’s the first major new Mac aimed at the consumer market in the Apple Silicon era. It’s meant to make a dent — perhaps a minuscule dent in the universe, but a big dent in the Mac’s share of the overall PC market.

Miscellaneous Observations

It’s worth noting that the Neo is aptly named. It really is altogether new. In that way it’s the opposite of the five-year-old M1 MacBook Air that Apple had been selling through retailers like Walmart and Amazon. Rather than selling something old for a lower price, they’ve designed and engineered something new from the ground up to launch at a lower price. It’s an all-new trackpad. It’s a good but different display than the Air’s — slightly smaller (13.0 inches vs. 13.6) and supporting only the sRGB color gamut, not P3. If you know the difference between sRGB and P3, the Neo is not the MacBook you want. What Neo buyers are going to notice is that the display looks good and is just as bright as the Air’s — and it looks way better, way sharper, and way brighter than the criminally ugly displays on PC laptops in this price range.

Even the Apple logo on the back of the display lid is different. Rather than make it polished and shiny, it’s simply debossed. Save a few bucks here, a few bucks there, and you eventually grind your way to a new MacBook that deserves the name “MacBook” but starts at just $600.

But of course there are trade-offs. You can use Apple’s Compare page to see the differences between the Neo and Air (and, for kicks, the 2020 M1 Air that until now was still being sold at Walmart). Even better, over at 512 Pixels Stephen Hackett has assembled a concise list of the differences between the MacBook Neo and MacBook Air. All of these things matter, but none of these things are dealbreakers for a $500–700 MacBook. These trade-offs are extremely well-considered on Apple’s part.

I’ll call out one item from Hackett’s 17-item list in particular:

One of the two USB-C ports is limited to USB 2.0 speeds of just 480 Mb/s.

On the one hand, this stinks. It just does. The two ports look exactly the same — and neither is labeled in any way — but they’re different. But on the other hand, the Neo is the first product with an A-series chip that Apple has ever made that supports two USB ports.¹ It was, I am reliably informed by Apple product marketing folks, a significant engineering achievement to get a second USB port at all on the MacBook Neo while basing it on the A18 Pro SoC. And while the ports aren’t labeled, if you plug an external display into the “wrong” port, you’ll get an on-screen notification suggesting you plug it into the other port. That this second USB-C port is USB 2.0 is not great, but it is fine.

Other notes:

I think the “fun-ness” of the Neo colors was overstated in the rumor mill. But the “blush” color is definitely pink, “citrus” is definitely yellow, and “indigo” is definitely blue. No confusing any of them with shades of gray.

The keyboards are color-matched. At a glance it’s easy to think the keyboards are all white, but only on the silver Neo are the key caps actually white. The others are all slightly tinted to match the color of the case. Nice!

8 GB of RAM is not a lot, but with Apple Silicon it really is enough for typical consumer productivity apps. (If they update the Neo annually and next year’s model gets the A19 Pro, it will move not to 16 GB of RAM but 12 GB.)

It’s an interesting coincidence that the base models for the Neo and iPhone 17e both cost $600. For $1,200 you can buy a new iPhone and a new MacBook for just $100 more than the price of the base model M5 MacBook Air. (And the iPhone 17e is the one with the faster CPU.)

With the Neo only offered in two configurations — $600 or $700 — and the M5 Air now starting at $1,100, Apple has no MacBooks in the range between $700 and $1,100.

To consider the spread of Apple’s market segmentation, and how the Neo expands it, think about the fact that on the premium side, the 13-inch iPad Pro Magic Keyboard costs $350. That’s a keyboard with a trackpad and a hinge. You can now buy a whole damn 13-inch MacBook Neo — which includes a keyboard, trackpad, and hinge, along with a display and speakers and a whole Macintosh computer — for just $250 more. ★

¹ Perhaps the closest Apple had ever come to an A-series-chip product with two ports was the original iPad from 2010, which in late prototypes had two 30-pin connectors — one on the long side and another on the short side — so that you could orient it either way in the original iPad keyboard dock.

Wednesday, 4 March 2026

- Studio Display vs. Studio Display XDR ★

Not sure if this page was there yesterday, but the main “Displays” page at Apple’s website is a spec-by-spec comparison between the regular and XDR models. Nice.

- Compatibility Notes on the New Studio Displays ★

Juli Clover, at MacRumors, notes that neither the new Studio Display nor the Studio Display XDR is compatible with Intel-based Macs. (I’m curious why.) Also, in a separate report, she notes that Macs with any M1 chip, or the base M2 or M3, are only able to drive the Studio Display XDR at 60 Hz. You need a Pro or better M2/M3, or any M4 or M5 chip, to drive it at 120 Hz.

Update: My understanding is that if you connect one of these new Studio Displays to an Intel-based Mac, it’ll work, but it’ll work as a dumb monitor. You won’t get the full features. I’ll bet Apple sooner or later publishes a support document explaining it, but for now, they’re just saying they’re not “compatible” because you don’t get the full feature set. Like with the Studio Display XDR in particular, you won’t get HDR or 120 Hz refresh rates.
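The refresh-rate rule for Apple Silicon Macs reported above can be written down as a small lookup. This is only a sketch of the rule as described in this post. The function name and the generation/tier encoding are hypothetical, and Intel Macs are left out because their behavior is, for now, only my understanding.

```python
VALID_TIERS = ("base", "pro", "max", "ultra")


def max_xdr_refresh_hz(generation: int, tier: str = "base") -> int:
    """Max Studio Display XDR refresh rate for an M-series Mac.

    generation: 1 for M1 through 5 for M5.
    tier: one of VALID_TIERS (hypothetical encoding for this sketch).
    """
    if generation not in range(1, 6):
        raise ValueError(f"unknown M-series generation: {generation}")
    if tier not in VALID_TIERS:
        raise ValueError(f"unknown tier: {tier}")

    if generation == 1:
        return 60  # any M1 chip is limited to 60 Hz
    if generation in (2, 3):
        # base M2/M3 are limited to 60 Hz; Pro or better gets 120 Hz
        return 60 if tier == "base" else 120
    return 120  # any M4 or M5 chip


for gen, tier in [(1, "max"), (2, "base"), (3, "pro"), (4, "base")]:
    print(f"M{gen} ({tier}): {max_xdr_refresh_hz(gen, tier)} Hz")
```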

- ‘In Other Words, Batman Has Become Superman and Robin Has Become Batman’ ★

Jason Snell, Six Colors:

Here’s the backstory: With every new generation of Apple’s Mac-series processors, I’ve gotten the impression from Apple execs that they’ve been a little frustrated with the perception that their “lesser” efficiency cores were weak sauce. I’ve lost count of the number of briefings and conversations I’ve had where they’ve had to go out of their way to point out that, actually, the lesser cores on an M-series chip are quite fast on their own, in addition to being very good at saving power!

Clearly they’ve had enough of that, so they’re changing how those cores are marketed to emphasize their performance, rather than their efficiency.

Tuesday, 3 March 2026

- Apple Announces Updated Studio Display and All-New Studio Display XDR ★

Apple Newsroom:

Apple today announced a new family of displays engineered to pair beautifully with Mac and meet the needs of everyone, from everyday users to the world’s top pros. The new Studio Display features a 12MP Center Stage camera, now with improved image quality and support for Desk View; a studio-quality three-microphone array; and an immersive six-speaker sound system with Spatial Audio. It also now includes powerful Thunderbolt 5 connectivity, providing more downstream connectivity for high-speed accessories or daisy-chaining displays. The all-new Studio Display XDR takes the pro display experience to the next level. Its 27-inch 5K Retina XDR display features an advanced mini-LED backlight with over 2,000 local dimming zones, up to 1000 nits of SDR brightness, and 2000 nits of peak HDR brightness, in addition to a wider color gamut, so content jumps off the screen with breathtaking contrast, vibrancy, and accuracy. With its 120Hz refresh rate, Studio Display XDR is even more responsive to content in motion, and Adaptive Sync dynamically adjusts frame rates for content like video playback or graphically intense games. Studio Display XDR offers the same advanced camera and audio system as Studio Display, as well as Thunderbolt 5 connectivity to simplify pro workflow setups. The new Studio Display with a tilt-adjustable stand starts at $1,599, and Studio Display XDR with a tilt- and height-adjustable stand starts at $3,299. Both are available in standard or nano-texture glass options, and can be pre-ordered starting tomorrow, March 4, with availability beginning Wednesday, March 11.

Compared to the first-generation Studio Display (March 2022), the updated model really just has a better camera. (Wouldn’t take much to improve upon the old camera.) The Studio Display XDR is the interesting new one. Apple doesn’t seem to have a “Compare” page for its displays, so the Studio Display Tech Specs and Studio Display XDR Tech Specs pages will have to suffice. Update: The main “Displays” page at Apple’s website serves as a comparison page between the new Studio Display and Studio Display XDR.

The regular Studio Display maxes out at 600 nits, and only supports a refresh rate of 60 Hz. The Studio Display XDR maxes out at 1,000 nits for SDR content and 2,000 nits for HDR, with up to 120 Hz refresh rate. Nice, but not enough to tempt me to upgrade from my current Studio Display with nano-texture, which I never seem to run at maximum brightness. I guess it would be nice to see HDR content, but not nice enough to spend $3,600 to get one with nano-texture. And I don’t think I care about 120 Hz on my Mac?

Unresolved is what this means for the Pro Display XDR, which remains unchanged since its debut in 2019.

Update: Whoops, apparently this has been resolved. A small-print note on the Newsroom announcement states:

Studio Display XDR replaces Pro Display XDR and starts at $3,299 (U.S.) and $3,199 (U.S.) for education.

- New MacBook Air With M5 ★

Apple Newsroom:

MacBook Air now comes standard with double the starting storage at 512GB with faster SSD technology, and is configurable up to 4TB, so customers can keep their most important work on hand. Apple’s N1 wireless chip delivers Wi-Fi 7 and Bluetooth 6 for seamless connectivity on the go. MacBook Air features a beautifully thin, light, and durable aluminum design, stunning Liquid Retina display, 12MP Center Stage camera, up to 18 hours of battery life, an immersive sound system with Spatial Audio, and two Thunderbolt 4 ports with support for up to two external displays.

Base storage went from 256 to 512 GB, but the base price went from the magic $999 to $1,100 ($1,099, technically, which doesn’t make the 99 seem magic). Presumably, those in the market for a $999 MacBook will buy the new about-to-be-announced-tomorrow lower-priced MacBook “Neo”, which I’m guessing will start at $800 ($799), maybe as low as $700 ($699), but will surely have higher-priced configurations for additional storage. Today’s new M5 MacBook Airs have storage upgrades of:

- 1 TB (+ $200)

- 2 TB (+ $600)

- 4 TB (+ $1,200)

Colors remain unchanged (and in my opinion, boring): midnight, starlight, silver, sky blue (almost black, gold-ish gray, gray, blue-ish gray). RAM options remain unchanged too: 16, 24, or 32 GB.

A comparison page showing the new M5 Air, old M4 Air, and base M5 MacBook Pro suggests not much else is new year-over-year, other than the Wi-Fi 7 and Bluetooth 6 support from the N1 chip.

- Apple Might Have Prematurely Leaked the Name ‘MacBook Neo’ ★

Joe Rossignol, MacRumors:

A regulatory document for a “MacBook Neo” (Model A3404) has appeared on Apple’s website. Unfortunately, there are no further details or images available yet. While the PDF file does not contain the “MacBook Neo” name, it briefly appeared in a link on Apple’s regulatory website for EU compliance purposes.

My money was on just plain “MacBook”, but I like “MacBook Neo”.

- Apple Introduces MacBook Pro Models With M5 Pro and M5 Max Chips ★

Apple Newsroom:

Apple today announced the latest 14- and 16-inch MacBook Pro with the all-new M5 Pro and M5 Max, bringing game-changing performance and AI capabilities to the world’s best pro laptop. With M5 Pro and M5 Max, MacBook Pro features a new CPU with the world’s fastest CPU core, a next-generation GPU with a Neural Accelerator in each core, and higher unified memory bandwidth, altogether delivering up to 4× AI performance compared to the previous generation, and up to 8× AI performance compared to M1 models. This allows developers, researchers, business professionals, and creatives to unlock new AI-enabled workflows right on MacBook Pro. It now comes with up to 2× faster SSD performance and starts at 1TB of storage for M5 Pro and 2TB for M5 Max. The new MacBook Pro includes N1, an Apple-designed wireless networking chip that enables Wi-Fi 7 and Bluetooth 6, bringing improved performance and reliability to wireless connections. It also offers up to 24 hours of battery life; a gorgeous Liquid Retina XDR display with a nano-texture option; a wide array of connectivity, including Thunderbolt 5; a 12MP Center Stage camera; studio-quality mics; an immersive six-speaker sound system; Apple Intelligence features; and the power of macOS Tahoe. The new MacBook Pro comes in space black and silver, and is available to pre-order starting tomorrow, March 4, with availability beginning Wednesday, March 11.

The MacBook Pro Tech Specs page is a good place to start to compare the entire M5 MacBook Pro lineup. One noteworthy change is that last year’s M4 Pro models only supported 24 or 48 GB of RAM; the new M5 Pro models support 24, 48, and 64 GB. Memory configurations for the M5 Max are unchanged from the M4 Max: 36, 48, 64, and 128 GB. (You could get an M4 Pro chip with 64 GB, but only on the Mac Mini.)

Also worth noting — Apple’s RAM pricing remains unchanged, despite the spike in memory prices industry-wide. With the “full” M5 Max chip (18-core CPU, 40-core GPU — there’s a lesser configuration with “only” 32 GPU cores for -$300), base memory is 48 GB. Upgrading to 64 GB costs $200, and upgrading to 128 GB costs $1,000. Same prices as last year. This means the price for a MacBook Pro with 64 GB of RAM — if that’s your main concern — dropped by $800 year over year. Last year you needed to buy one with the high-end M4 Max chip to get 64 GB; now you can configure a MacBook Pro with the M5 Pro with 64 GB. Nice!

Ben Thompson and I wagered a steak dinner on this on Dithering. Ben bet on Apple’s memory prices going up; I bet on them staying the same. My thinking was that this industry-wide spike in RAM prices is exactly why Apple has always charged more for memory — “just in case”. I’m going to enjoy that steak.

- Apple Debuts M5 Pro and M5 Max, and Renames Its M-Series CPU Cores ★

Apple Newsroom:

Apple today announced M5 Pro and M5 Max, the world’s most advanced chips for pro laptops, powering the new MacBook Pro. The chips are built using a new Apple-designed Fusion Architecture. This innovative design combines two dies into a single system on a chip (SoC), which includes a powerful CPU, scalable GPU, Media Engine, unified memory controller, Neural Engine, and Thunderbolt 5 capabilities. M5 Pro and M5 Max feature a new 18-core CPU architecture. It includes six of the highest-performing core design, now called super cores, that are the world’s fastest CPU core. Alongside these cores are 12 all-new performance cores, optimized for power-efficient, multithreaded workloads. [...]

The industry-leading super core was first introduced as performance cores in M5, which also adopts the super core name for all M5-based products — MacBook Air, the 14-inch MacBook Pro, iPad Pro, and Apple Vision Pro. This core is the highest-performance core design with the world’s fastest single-threaded performance, driven in part by increased front-end bandwidth, a new cache hierarchy, and enhanced branch prediction.

M5 Pro and M5 Max also introduce an all-new performance core that is optimized to deliver greater power-efficient, multithreaded performance for pro workloads. Together with the super cores, the chips deliver up to 2.5× higher multithreaded performance than M1 Pro and M1 Max. The super cores and performance cores give MacBook Pro a huge performance boost to handle the most CPU-intensive pro workloads, like analyzing complex data or running demanding simulations with unparalleled ease.

This is a bit confusing, but I think — after a media briefing with Apple reps this morning — I’ve got it straight. From the M1 through M4, there were two CPU core types: efficiency and performance. When the regular M5 chip debuted in October, Apple continued using those same names, efficiency and performance, for its two core types. But as of today, they’re renaming them, and introducing a third core type that they’re calling “performance”. They’re reusing the old performance name for an altogether new CPU core type. So you can see what I mean about it being confusing.

There are now three core types in M5-series CPUs. Efficiency cores are still “efficiency”, but they’re only in the base M5. What used to be called “performance” cores are now called “super” cores, and they’re present in all M5 chips. The new core type — more power-efficient than super cores, more performant than efficiency cores — is taking the old name “performance”. Here are the core counts in table form, with separate rows for the 15- and 18-core M5 Pro variants:

| | Efficiency | Performance | Super |
|---|---|---|---|
| M5 | 6 | — | 4 |
| M5 Pro | — | 10 | 5 |
| M5 Pro | — | 12 | 6 |
| M5 Max | — | 12 | 6 |

Another way to think about it is that there are regular efficiency cores in the plain M5, and new higher-performing efficiency cores called “performance” in the M5 Pro and M5 Max. The problem is that the old M1–M4 names were clear — one CPU core type was fast but optimized for efficiency so they called it “efficiency”, and the other core type was efficient but optimized for performance so they called it “performance”. Now, the new “performance” core types are the optimized-for-efficiency CPU cores in the Pro and Max chips, and despite their name, they’re not the most performant cores.
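For anyone who wants to sanity-check the naming, here is a minimal Python sketch that encodes the core counts listed above and adds them up. The dictionary layout, the "(15-core)" and "(18-core)" labels, and the function names are illustrative assumptions, not anything Apple publishes.

```python
# Core counts per chip, copied from the table above.
# None marks a core type that the chip does not include.
# The "(15-core)" / "(18-core)" labels are illustrative, to tell the two
# M5 Pro rows apart.
CORE_COUNTS = {
    "M5": {"efficiency": 6, "performance": None, "super": 4},
    "M5 Pro (15-core)": {"efficiency": None, "performance": 10, "super": 5},
    "M5 Pro (18-core)": {"efficiency": None, "performance": 12, "super": 6},
    "M5 Max": {"efficiency": None, "performance": 12, "super": 6},
}


def total_cores(chip):
    """Sum every core type the chip actually has."""
    return sum(n for n in CORE_COUNTS[chip].values() if n is not None)


def core_types(chip):
    """List the core-type names present on the chip, in table order."""
    return [name for name, n in CORE_COUNTS[chip].items() if n is not None]


if __name__ == "__main__":
    for chip in CORE_COUNTS:
        print(chip, total_cores(chip), core_types(chip))
```

The sums come out to 10 cores for the base M5, and 15 or 18 for the Pro and Max chips. Every chip has super cores, and no chip mixes "efficiency" with the new "performance" type.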

HazeOver — Mac Utility for Highlighting the Frontmost Window

Monday, 2 March 2026

Back in December I linked to a sort-of stunt project from Tyler Hall called Alan.app — a simple Mac utility that draws a bold rectangle around the current active window. Alan.app lets you set the thickness and color of the frame. I used it for an hour or so before calling it quits. It really does solve the severe (and worsening) problem of being able to instantly identify the active window in recent versions of MacOS, but the crudeness of Alan.app’s implementation makes it one of those cases where the cure is worse than the disease. Ultimately I’d rather suffer from barely distinguishable active window state than look at Alan.app’s crude active-window frame all day every day. What makes Alan.app interesting to me is its effectiveness as a protest app. The absurdity of Alan.app’s crude solution highlights the absurdity of the underlying problem — that anyone would even consider running Alan.app (or the fact that Hall was motivated to create and release it) shows just how bad windowing UI is in recent MacOS versions.

Turns out there exists an app that attempts to solve this problem in an elegant way that you might want to actually live with. It’s called HazeOver, and developer Maxim Ananov first released it a decade ago. It’s in the Mac App Store for $5, is included in the SetApp subscription service, and has a free trial available from the website.

What HazeOver does is highlight the active window by dimming all background windows. That’s it. But it does this simple task with aplomb, and it makes a significant difference in the day-to-day usability of MacOS. Not just MacOS 26 Tahoe — all recent versions of MacOS suffer from a design that makes it difficult to distinguish, instantly, the frontmost (a.k.a. key) window from background windows.¹ Making all background windows a little dimmer makes a notable difference.

Longtime DF reader Faisal Jawdat sent me a note suggesting I try HazeOver back in early December, after I linked to Alan.app. I didn’t get around to trying HazeOver until December 30, and I’ve been using it ever since. One thing I did, at first, was not set HazeOver to launch automatically at login. That way, each time I restarted or logged out, I’d go back to the default MacOS 15 Sequoia interface, where background windows aren’t dimmed. I wanted to see if I’d miss HazeOver when it wasn’t running. Each time, I did notice, and I missed it. I now have it set to launch automatically when I log in.

HazeOver’s default settings are a bit strong for my taste. By default, it dims background windows by 35 percent. I’ve dialed that back to just 10 percent, and that’s more than noticeable enough for me. I understand why HazeOver’s default dimming is so strong — it emphasizes just what HazeOver is doing. (Also, some people choose to use HazeOver to avoid being distracted by background window content — in which case you might want to increase, not decrease, the dimming from the default setting.) But after you get used to it, you might find, as I did, that a little bit goes a long way. (Jawdat told me he’s dropped down to 12 percent on his machine.) I’ve also diddled with HazeOver’s animation settings, changing from the default (Ease Out, 0.3 seconds) to Ease In & Out, 0.1 seconds — I want switching windows to feel fast fast fast.

Highly recommended, and a veritable bargain at just $5. ★

-

The HazeOver website also has a link to a beta version with updates specific to MacOS 26 Tahoe. To be clear, the current release version, available in the App Store, works just fine on Tahoe. But the beta version has a Liquid Glass-style Settings window, and addresses an edge case where, on Tahoe, the menu bar sometimes appears too dim. ↩︎

Monday, 2 March 2026

- Unsung Heroes: Flickr’s URLs Scheme ★

-

Marcin Wichary, writing at Unsung (which is just an incredibly good and fun weblog):

Half of my education in URLs as user interface came from Flickr in the late 2000s. Its URLs looked like this:

flickr.com/photos/mwichary/favorites
flickr.com/photos/mwichary/sets
flickr.com/photos/mwichary/sets/72177720330077904
flickr.com/photos/mwichary/54896695834
flickr.com/photos/mwichary/54896695834/in/set-72177720330077904

This was incredible and a breath of fresh air. No redundant www. in front or awkward .php at the end. No parameters with their unpleasant ?&= syntax. No % signs partying with hex codes. When you shared these URLs with others, you didn’t have to retouch or delete anything. When Chrome’s address bar started autocompleting them, you knew exactly where you were going.

This might seem silly. The user interface of URLs? Who types in or edits URLs by hand? But keyboards are still the most efficient entry device. If a place you’re going is where you’ve already been, typing a few letters might get you there much faster than waiting for pages to load, clicking, and so on.

In general, URLs at Daring Fireball try to work like this.

- This post: /linked/2026/03/02/wichary-flickr-urls

- In Markdown: /linked/2026/03/02/wichary-flickr-urls.text

- This day’s posts: /linked/2026/03/02/

- This month’s posts: /linked/2026/03/

I say “in general” because the DF URLs could be better. There should be one unified URL space for all posts on DF, not separate ones for feature articles and Linked List posts. Someday.
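The Linked List URL pattern above is regular enough to sketch in a few lines of Python. This is only an illustration of the scheme as listed. The function names are hypothetical, and it makes no claim about how Daring Fireball actually generates its URLs.

```python
from datetime import date


def linked_list_urls(day, slug):
    """Build the four URL forms listed above for one Linked List post."""
    month_url = f"/linked/{day:%Y/%m}/"
    day_url = f"{month_url}{day:%d}/"
    post_url = f"{day_url}{slug}"
    return {
        "post": post_url,
        "markdown": post_url + ".text",
        "day": day_url,
        "month": month_url,
    }


def parent(path):
    """Trim the last path segment, the way a reader might by hand."""
    trimmed = path.rstrip("/")
    return trimmed[: trimmed.rfind("/") + 1]


if __name__ == "__main__":
    urls = linked_list_urls(date(2026, 3, 2), "wichary-flickr-urls")
    for name, url in urls.items():
        print(f"{name}: {url}")
    print(parent(urls["post"]))
```

The `parent` helper is the point Wichary is making: in a hackable URL scheme, deleting the last segment of a post’s URL yields that day’s posts, and deleting one more yields the month.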

Wichary subsequently posted this fine follow-up, chock full of links regarding URL design.

- ChangeTheHeaders ★

-

During the most recent episode of The Talk Show, Jason Snell brought up a weird issue that I started running into last year. On my Mac, sometimes I’d drag an image out of a web page in Safari, and I’d get an image in WebP format. Sometimes I wouldn’t care. But usually when I download an image like that, it’s because I want to publish (or merely host my own copy of) that image on Daring Fireball. And I don’t publish WebP images — I prefer PNG and JPEG for compatibility.

What made it weird is when I’d view source on the original webpage, the original image was usually in PNG or JPEG format. If I opened the image in a new tab — just the image — I’d get it in PNG or JPEG format. But when I’d download it by dragging out of the original webpage, I’d get a WebP. This was a total WTF for me.

I turned to my friend Jeff Johnson, author of, among other things, the excellent Safari extension StopTheMadness. Not only was Johnson able to explain what was going on, he actually made a new Safari extension called ChangeTheHeaders that fixed the problem for me. Johnson, announcing ChangeTheHeaders last year:

After some investigation, I discovered that the difference was the Accept HTTP request header, which specifies what types of response the web browser will accept. Safari’s default Accept header for images is this:

Accept: image/webp,image/avif,image/jxl,image/heic,image/heic-sequence,video/*;q=0.8,image/png,image/svg+xml,image/*;q=0.8,*/*;q=0.5

Although image/webp appears first in the list, the order actually doesn’t matter. The quality value, specified by the ;q= suffix, determines the ranking of types. The range of values is 0 to 1, with 1 as the default value if none is specified. Thus, image/webp and image/png have equal precedence, equal quality value 1, leaving it up to the web site to decide which image type to serve. In this case, the web site decided to serve a WebP image, despite the fact that the image URL has a .png suffix. In a URL, unlike in a file path, the “file extension”, if one exists, is largely meaningless. A very simple web server will directly match a URL with a local file path, but a more complex web server can do almost anything it wants with a URL.

This was driving me nuts. Thanks to Johnson, I now understand why it was happening, and I had a simple set-it-and-forget-it tool to fix it. Johnson writes:

What can you do with ChangeTheHeaders? I suspect the biggest selling point will be to spoof the User-Agent. The extension allows you to customize your User-Agent by URL domain. For example, you can make Safari pretend that it’s Chrome on Google web apps that give special treatment to Chrome. You can also customize the Accept-Language header if you don’t like the default language handling of some website, such as YouTube.

Here’s the custom rule I applied a year ago, when I first installed ChangeTheHeaders (screenshot):

Header: Accept

Value: image/avif,image/jxl,image/heic,image/heic-sequence,video/*;q=0.8,image/png,image/svg+xml,image/*;q=0.8,*/*;q=0.5

URL Domains: «leave blank for all domains»

URL Filter: «leave blank for all URLs»

Resource Types: image

I haven’t seen a single WebP since.
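To make the quality-value ranking concrete, here is a minimal Python sketch that parses the two Accept values quoted above and looks up the effective q for a given image type. It is a simplified reading of the header, not a full HTTP content-negotiation implementation, and the function names are hypothetical.

```python
# The two header values quoted above: Safari's default, and the custom rule.
SAFARI_DEFAULT = (
    "image/webp,image/avif,image/jxl,image/heic,image/heic-sequence,"
    "video/*;q=0.8,image/png,image/svg+xml,image/*;q=0.8,*/*;q=0.5"
)
CUSTOM_RULE = (
    "image/avif,image/jxl,image/heic,image/heic-sequence,"
    "video/*;q=0.8,image/png,image/svg+xml,image/*;q=0.8,*/*;q=0.5"
)


def parse_accept(header):
    """Return (media_range, q) pairs sorted by q, highest first."""
    ranked = []
    for item in header.split(","):
        parts = [p.strip() for p in item.split(";")]
        q = 1.0  # the default when no ;q= parameter is given
        for param in parts[1:]:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                q = float(value)
        ranked.append((parts[0], q))
    # Stable sort: entries with equal q keep header order, which by itself
    # carries no ranking meaning.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


def effective_q(header, media_type):
    """Look up q for a type: exact match, then type/*, then */*."""
    prefs = dict(parse_accept(header))
    main_type = media_type.split("/")[0]
    for candidate in (media_type, f"{main_type}/*", "*/*"):
        if candidate in prefs:
            return prefs[candidate]
    return 0.0


if __name__ == "__main__":
    for label, header in (("default", SAFARI_DEFAULT), ("custom", CUSTOM_RULE)):
        print(label, effective_q(header, "image/webp"), effective_q(header, "image/png"))
```

Under the default header, image/webp and image/png both come out at 1.0, which is the tie Johnson describes. Under the custom rule, image/webp is no longer listed, so it only matches the image/* wildcard at 0.8 and ranks below image/png. A server is still free to ignore the ranking, so treat this as an explanation of the preference, not a guarantee.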

ChangeTheHeaders works everywhere Safari does — Mac, iPhone, iPad, Vision Pro — and you can get it for just $7 on the App Store.

- Welcome (Back) to Macintosh ★

-

Jesper, writing at Take:

My hope is that Macintosh is not just one of these empires that was at the height of its power and then disintegrated because of warring factions, satiated and uncurious rulers, and droughts for which no one was prepared, ruining crops no one realized were essential for survival.

My hope is that there remains a primordial spark, a glimpse of genius, to rediscover, to reconnect to — to serve not annual trends or constant phonification, but the needs of the user to use the computer as a tool to get something done.

- SerpApi Filed Motion to Dismiss Google’s Lawsuit ★

-

Julien Khaleghy, CEO of SerpApi:

Google thinks it owns the internet. That’s the subtext of its lawsuit against SerpApi, the quiet part that it’s suddenly decided to shout out loud. The problem is, no one owns the internet. And the law makes that clear.

In January, we promised that we would fight this lawsuit to protect our business model and the researchers and innovators who depend on our technology. Today, Friday, February 20, 2026, we’re following through with a motion to dismiss Google’s complaint. While this is just one step in what could be a long and costly legal process, I want to explain why we’re confident in our position.

Is Google hurting itself in its confusion? Google is the largest scraper in the world. Google’s entire business began with a web crawler that visited every publicly accessible page on the internet, copied the content, indexed it, and served it back to users. It did this without distinguishing between copyrighted and non-copyrighted material, and it did this without asking permission. Now Google is in federal court claiming that our scraping is illegal.

I’ve come around on SerpApi in the last few months. My initial take was that it surely must be illegal for a company to scrape Google’s search results and offer access to that data as an API. But I’ve come around to the argument that what SerpApi is doing to obtain Google search results is, well, exactly how Google scrapes the rest of the entire web to build its search index. It’s all just scraping publicly accessible web pages.

This December piece by Mike Masnick at Techdirt is what began to change my mind:

Look, SerpApi’s behavior is sketchy. Spoofing user agents, rotating IPs to look like legitimate users, solving CAPTCHAs programmatically — Google’s complaint paints a picture of a company actively working to evade detection. But the legal theory Google is deploying to stop them threatens something far bigger than one shady scraper.

Google’s entire business is built on scraping as much of the web as possible without first asking permission. The fact that they now want to invoke DMCA 1201 — one of the most consistently abused provisions in copyright law — to stop others from scraping them exposes the underlying problem with these licensing-era arguments: they’re attempts to pull up the ladder after you’ve climbed it.

Just from a straight up perception standpoint, it looks bad.

- ‘Anthropic and Alignment’ ★

-

Ben Thompson, writing at Stratechery:

In fact, Amodei already answered the question: if nuclear weapons were developed by a private company, and that private company sought to dictate terms to the U.S. military, the U.S. would absolutely be incentivized to destroy that company. The reason goes back to the question of international law, North Korea, and the rest:

- International law is ultimately a function of power; might makes right.

- There are some categories of capabilities — like nuclear weapons — that are sufficiently powerful to fundamentally affect the U.S.’s freedom of action; we can bomb Iran, but we can’t North Korea.

- To the extent that AI is on the level of nuclear weapons — or beyond — is the extent that Amodei and Anthropic are building a power base that potentially rivals the U.S. military.

Anthropic talks a lot about alignment; this insistence on controlling the U.S. military, however, is fundamentally misaligned with reality. Current AI models are obviously not yet so powerful that they rival the U.S. military; if that is the trajectory, however — and no one has been more vocal in arguing for that trajectory than Amodei — then it seems to me the choice facing the U.S. is actually quite binary:

- Option 1 is that Anthropic accepts a subservient position relative to the U.S. government, and does not seek to retain ultimate decision-making power about how its models are used, instead leaving that to Congress and the President.

- Option 2 is that the U.S. government either destroys Anthropic or removes Amodei.

It’s Congress that is absent in — looks around — all of this. Right down to the name of the Department of Defense. The whole Trump administration has taken to calling it the Department of War, but only Congress can change the legal name. (Anthropic, despite its very public spat with the administration, refers to it as the “Department of War” as well. But serious publications like the Journal and New York Times continue to call it the Department of Defense.)

Nilay Patel, quoting the same section of Thompson’s column I quoted above, sees it as “Ben Thompson making a full-throated case for fascism”. I see it as the case against corporatocracy. Who sets our defense policies? Our democratically elected leaders, or the CEOs of corporate defense contractors?

- WSJ: ‘Trump Administration Shuns Anthropic, Embraces OpenAI in Clash Over Guardrails’ ★

-

Amrith Ramkumar, reporting for The Wall Street Journal (gift link):

Trump’s announcement came shortly before the Pentagon’s Friday afternoon deadline for Anthropic to agree to let the military use its models in all lawful-use cases, a concession the company had refused to make. “We cannot in good conscience accede to their request,” Anthropic Chief Executive Dario Amodei said on Thursday.

The company’s red lines had been domestic mass surveillance and autonomous weapons, areas the Pentagon said Anthropic didn’t need to worry about because the military would never break the law with AI. Defense Department officials said Anthropic needed to fully trust the Pentagon to use the technology responsibly and relinquish control.

OpenAI Chief Executive Sam Altman said the company’s deal with the Defense Department includes those same prohibitions on mass surveillance and autonomous weapons, as well as technical safeguards to make sure the models behave as they should. “We have expressed our strong desire to see things de-escalate away from legal and governmental actions and towards reasonable agreements,” he said, adding that OpenAI asked that all companies be given the chance to accept the same deal. [...]

Shortly after the deadline, Defense Secretary Pete Hegseth said on X that he is designating the company a supply-chain risk, impairing its ability to work with other government contractors.

My short take is that both of these are true:

- It’s not the place of a corporation to dictate terms to the Department of Defense regarding how its product or services are used within the law.

- It’s a preposterous, childish (and almost certainly illegal) overreaction to designate Anthropic a “supply-chain risk to national security” in this way. Grow up.

See also: Anthropic’s official response.

- Seasonal Color Updates to Apple’s iPhone Cases and Apple Watch Bands ★

-

Joe Rossignol, MacRumors:

A seasonal color refresh arrived today for a variety of Apple accessories, including iPhone cases, Apple Watch bands, and the Crossbody Strap. All of the accessories in the latest colors are available to order on Apple.com starting today.

- Apple Introduces New iPad Air With M4 ★

-

Apple Newsroom:

Apple today announced the new iPad Air featuring M4 and more memory, giving users a big jump in performance at the same starting price. With a faster CPU and GPU, iPad Air boosts tasks like editing and gaming, and is a powerful device for AI with a faster Neural Engine, higher memory bandwidth, and 50 percent more unified system memory than the previous generation. With M4, iPad Air is up to 30 percent faster than iPad Air with M3, and up to 2.3× faster than iPad Air with M1. The new iPad Air also features the latest in Apple silicon connectivity chips, N1 and C1X, delivering fast wireless and cellular connections — and support for Wi-Fi 7 — that empower users to work and be creative anywhere. [...]

With the same starting price of just $599 for the 11-inch model and $799 for the 13-inch model, the new iPad Air is an incredible value. And for education, the 11-inch iPad Air starts at $549, and the 13-inch model starts at $749. Customers can pre-order iPad Air starting Wednesday, March 4, with availability beginning Wednesday, March 11.

So much for my theory that Apple would separate its announcements this week with separate days for each product family (e.g. iPhone 17e on Monday, iPads on Tuesday, MacBooks on Wednesday). Maybe an update to the no-adjective iPad isn’t coming this week?

Aside from the M3 to M4 speed bump, there are very few differences between this generation iPad Air and the last. Same colors even (space gray, blue, purple, and starlight). Here’s a link to Apple’s iPad Compare page, preset to show the current M5 iPad Pro, new M4 iPad Air, and old M3 iPad Air side-by-side.

One interesting tech spec: the new M4 iPad Air models come with 12 GB of RAM, up from 8 GB in last year’s M3 models. With the M5 iPad Pro models, RAM is tied to storage: the 256/512 GB iPad Pros come with 12 GB RAM; the 1/2 TB models come with 16 GB RAM.

- Apple Introduces the iPhone 17e ★

-

Apple Newsroom:

Apple today announced iPhone 17e, a powerful and more affordable addition to the iPhone 17 lineup. At the heart of iPhone 17e is the latest-generation A19, which delivers exceptional performance for everything users do. iPhone 17e also features C1X, the latest-generation cellular modem designed by Apple, which is up to 2× faster than C1 in iPhone 16e. The 48MP Fusion camera captures stunning photos, including next-generation portraits, and 4K Dolby Vision video. It also enables an optical-quality 2× Telephoto — like having two cameras in one. The 6.1-inch Super Retina XDR display features Ceramic Shield 2, offering 3× better scratch resistance than the previous generation and reduced glare. With MagSafe, users can enjoy fast wireless charging and access to a vast ecosystem of accessories like chargers and cases. And when iPhone 17e users are outside of cellular and Wi-Fi coverage, Apple’s groundbreaking satellite features — including Emergency SOS, Roadside Assistance, Messages, and Find My via satellite — help them stay connected when it matters most.

Available in three elegant colors with a premium matte finish — black, white, and a beautiful new soft pink — iPhone 17e will be available for pre-order beginning Wednesday, March 4, with availability starting Wednesday, March 11. iPhone 17e will start at 256GB of storage for $599 — 2× the entry storage from the previous generation at the same starting price, and 4× more than iPhone 12 — giving users more space for high-resolution photos, 4K videos, apps, games, and more.

The main year-over-year changes from the 16e:

- MagSafe, the absence of which felt like the one bit of marketing spite in the 16e.

- An additional color other than black or white.

- SoC goes from A18 to A19, the same chip in the iPhone 17, except the iPhone 17 has 5 GPU cores and the 17e only 4 (same as the 16e). No big whoop.

- Improved camera with next-gen portraits. I found the 16e camera to be surprisingly good.

- Ceramic Shield 2 on the front glass.

- Base storage goes from 128 to 256 GB, while the price remains $600.

- The 512 GB version drops from $900 to $800.

That’s about it. Here’s a preset version of Apple’s iPhone Compare page with the iPhone 17, 17e, and 16e.

Sunday, 1 March 2026

- Sentry ★

-

My thanks to Sentry for sponsoring last week at DF. Sentry is running a hands-on workshop: “Crash Reporting, Tracing, and Logs for iOS in Sentry”. You can watch it on demand. You’ll learn how to connect the dots between slowdowns, crashes, and the user experience in your iOS app. It’ll show you how to:

- Set up Sentry to surface high-priority mobile issues without alert fatigue.

- Use Logs and Breadcrumbs to reconstruct what happened with a crash.

- Find what’s behind a performance bottleneck using Tracing.

- Monitor and reduce the size of your iOS app using Size Analysis.

I know so many developers using Sentry. It’s a terrific product. If you’re a developer and haven’t checked them out, you should.

- The Talk Show: ‘Bad Dates’ ★

-

Jason Snell returns to the show to discuss the 2025 Six Colors Apple Report Card, MacOS 26 Tahoe, Apple Creator Studio, along with what we expect/hope for in next week’s Apple product announcements.

Sponsored by:

- Notion: The AI workspace where teams and AI agents get more done together.

- Squarespace: Save 10% off your first purchase of a website or domain using code talkshow.

- Sentry: A real-time error monitoring and tracing platform. Use code TALKSHOW for $80 in free credits.

Saturday, 28 February 2026

- Trump’s Enormous Gamble on Regime Change in Iran ★

-

Tom Nichols, writing for The Atlantic:

When the 2003 war with Iraq ended, U.S. Ambassador Barbara Bodine said that when American diplomats embarked on reconstruction, they ruefully joked that “there were 500 ways to do it wrong and two or three ways to do it right. And what we didn’t understand is that we were going to go through all 500.”

Friday, 27 February 2026

- West Virginia’s Anti-Apple CSAM Lawsuit Would Help Child Predators Walk Free ★

-

Mike Masnick, writing for Techdirt:

Read that again. If West Virginia wins — if an actual court orders Apple to start scanning iCloud for CSAM — then every image flagged by those mandated scans becomes evidence obtained through a warrantless government search conducted without probable cause. The Fourth Amendment’s exclusionary rule means defense attorneys get to walk into court and demand that evidence be thrown out. And they’ll win that motion. It’s not even a particularly hard case to make.

- How to Block the ‘Upgrade to Tahoe’ Alerts and System Settings Indicator ★

-

Rob Griffiths, writing at The Robservatory:

So I have macOS Tahoe on my laptop, but I’m keeping my desktop Mac on macOS Sequoia for now. Which means I have the joy of seeing things like this wonderful notification on a regular basis. Or I did, until I found a way to block them, at least in 90 day chunks. [...]

The secret? Using device management profiles, which let you enforce policies on Macs in your organization, even if that “organization” is one Mac on your desk. One of the available policies is the ability to block activities related to major macOS updates for up to 90 days at a time (the max the policy allows), which seems like exactly what I needed.

I followed Griffiths’s instructions about a week or so ago, and I’ve been enjoying a no-red-badge System Settings icon ever since. And the Tahoe upgrade doesn’t even show up in General → Software Update. With this profile installed, the confusing interface presented after clicking the “ⓘ” button next to any available update cannot result in your upgrading to 26 Tahoe accidentally.

I waited to link to Griffiths’s post until I saw the pending update from Sequoia 15.7.3 to 15.7.4, just to make sure that was still working. And here it is. My Software Update panel makes it look like Tahoe doesn’t even exist. A delicious glass of ice water, without the visit to hell.

I have one small clarification to Griffiths’s instructions though. He writes:

4/. Optional step: I didn’t want to defer normal updates, just the major OS update, so I changed the Optional (set to your taste) section to look like this:

forceDelayedSoftwareUpdates

This way, I’ll still get notifications for updates other than the major OS update, in case Apple releases anything further for macOS Sequoia. Remember to save your changes, then quit the editor.

I was confused by this step, initially, and only edited the first line after <!-- Optional (set to your taste) -->, to change <true/> to <false/> in the next line. But what Griffiths means, and what is necessary to get the behavior I wanted, is deleting the other two lines in that section of the plist file. I don’t want to defer updates like going from 15.7.3 to 15.7.4.

Before editing:

<!-- Optional (set to your taste) -->
<key>forceDelayedSoftwareUpdates</key><true/>
<key>enforcedSoftwareUpdateMinorOSDeferredInstallDelay</key><integer>30</integer>
<key>enforcedSoftwareUpdateNonOSDeferredInstallDelay</key><integer>30</integer>

After:

<!-- Optional (set to your taste) -->
<key>forceDelayedSoftwareUpdates</key><false/>

I’ll bet that’s the behavior most of my fellow MacOS 15 Sequoia holdouts want too.
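If you’d rather script that edit than do it by hand, here is a minimal sketch using Python’s standard plistlib. It assumes the three keys shown above sit together in one dictionary. The `BEFORE` document is a stand-in wrapper for illustration only, since the real profile from Griffiths’s post contains other keys that aren’t reproduced here, and `keep_minor_updates` is a hypothetical helper name.

```python
import plistlib

# Stand-in for the optional section shown above, wrapped in a minimal plist
# so it can be parsed on its own. The real profile has more keys than this.
BEFORE = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
    <key>forceDelayedSoftwareUpdates</key><true/>
    <key>enforcedSoftwareUpdateMinorOSDeferredInstallDelay</key><integer>30</integer>
    <key>enforcedSoftwareUpdateNonOSDeferredInstallDelay</key><integer>30</integer>
</dict>
</plist>
"""


def keep_minor_updates(settings):
    """Apply the 'After' edit: flip the flag and drop the two delay keys."""
    edited = dict(settings)  # leave the caller's dictionary untouched
    edited["forceDelayedSoftwareUpdates"] = False
    edited.pop("enforcedSoftwareUpdateMinorOSDeferredInstallDelay", None)
    edited.pop("enforcedSoftwareUpdateNonOSDeferredInstallDelay", None)
    return edited


if __name__ == "__main__":
    after = keep_minor_updates(plistlib.loads(BEFORE))
    print(plistlib.dumps(after).decode("utf-8"))
```

The result contains only the forceDelayedSoftwareUpdates key set to false, matching the “After” listing. The sketch works on a copy of the parsed dictionary, so the original settings stay available for comparison.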

A Sometimes-Hidden Setting Controls What Happens When You Tap a Call in the iOS 26 Phone App

Friday, 27 February 2026

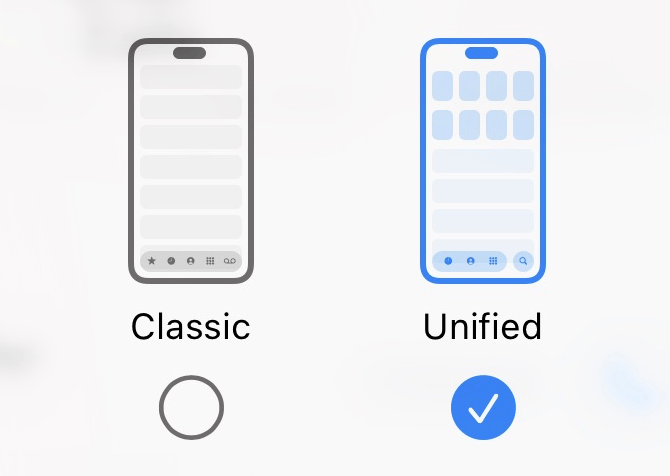

Back in December, Adam Engst wrote this interesting follow-up to his feature story at TidBITS a few weeks prior exploring the differences between the new Unified and old Classic interface modes for the Phone app in iOS 26. It’s also a good follow-up to my month-ago link to Engst’s original feature, as well as a continuation of my recent theme on the fundamentals of good UI design.

The gist of Engst’s follow-up is that one of the big differences between Unified and Classic modes is what happens when you tap on a row in the list of recent calls. In Classic, tapping on a row in the list will initiate a new phone call to that number. There’s a small “ⓘ” button on the right side of each row that you can tap to show the contact info for that caller. That’s the way the Phone app has always worked. In the new iOS 26 Unified mode, this behavior is reversed: tapping on the row shows the contact info for that caller, and you need to tap a small button with a phone icon on the right side of the row to immediately initiate a call.

Engst really likes this aspect of the Unified view, because the old behavior made it too easy to initiate a call accidentally, just by tapping on a row in the list. I’ve made many of those accidental calls the same way, and so I prefer the new Unified behavior for the same reason. Classic’s tap-almost-anywhere-in-the-row-to-start-a-call behavior is a vestige of some decisions with the original iPhone that haven’t held up over the intervening 20 years. With the original iPhone, Apple was still stuck — correctly, probably! — in the mindset that the iPhone was first and foremost a cellular telephone, and initiating phone calls should be a primary one-tap action. No one thinks of the iPhone as primarily a telephone these days, and it just isn’t iOS-y to have an action initiate just by tapping anywhere in a row in a scrolling list. You don’t tap on an email message to reply to it. You tap a Reply button. Inadvertent phone calls are particularly pernicious in this regard because the recipient is interrupted too — it’s not just an inconvenience to you, it’s an interruption to someone else, and thus also an embarrassment to you.

Here’s where it gets weird.

There’s a preference setting in Settings → Apps → Phone for “Tap Recents to Call”. If you turn this option on, you then get the “tap anywhere in the row to call the person” behavior while using the new Unified view. But this option only appears in the Settings app when you’re using Unified view in the Phone app. If you switch to the Classic view in the Phone app, this option just completely disappears from the Settings app. It’s not grayed out. It’s just gone. Go read Engst’s article describing this, if you haven’t already — he has screenshots illustrating the sometimes-hidden state of this setting.

I’ll wait.

Engst and I discussed this at length during his appearance on The Talk Show earlier this week. Especially after talking it through with him on the show, I think I understand both what Apple was thinking, and also why their solution feels so wrong.

At first, I thought the solution was just to keep this option available all the time, whether you’re using Classic or Unified as your layout in the Phone app. Why not let users who prefer the Classic layout turn off the old “tap anywhere in the row to call the person” behavior? But on further thought, there’s a problem with this. If you just want your Phone app to keep working the way it always had, you want Classic to default to the old tap-in-row behavior too. What Apple wants to promote to users is both a new layout and a new tap-in-row behavior. So when you switch to Unified in the Phone app, Apple wants you to experience the new tap-in-row behavior too, where you need to specifically tap the small phone-icon button in the row to call the person, and tapping anywhere else in the row opens a contact details view.

There’s a conflict here. You can’t have the two views default to different row-tapping behavior if one single switch applies to both views.

Apple’s solution to this dilemma (showing the “Tap Recents to Call” option in Settings if, and only if, Unified is the current view option in the Phone app) is lazy. And as a result, it’s quite confusing. No one expects an option like this to only appear sometimes in Settings. You pretty much need to understand everything I’ve written about in this article to understand why and when this option is visible. Which means almost no one who uses an iPhone is ever going to understand it. No one expects a toggle in one app (Phone) to control the visibility of a switch in another app (Settings).

My best take on a proper solution to this problem would be for the choice between Classic and Unified views to be mirrored in Settings → Apps → Phone. Show this same bit of UI, which is currently available only in the Filter menu in the Phone app, in both the Phone app and in Settings → Apps → Phone:

If you change it in one place, the change should be reflected, immediately, in the other. It’s fine to have the same setting available both in-app and inside the Settings app.

Then, in the Settings app, the “Tap Recents to Call” option could appear underneath the Classic/Unified switcher only when “Unified” is selected. Switch from Classic to Unified and the “Tap Recents to Call” switch would appear underneath. Switch from Unified to Classic and it would disappear. (Or instead of disappearing, it could gray out to indicate the option isn’t available when Classic is selected.) The descriptive text for the option could even state that it’s only available with Unified.¹
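To make the rule concrete, here is a minimal Python sketch of the behavior proposed above. Every name in it (`Layout`, `PhoneSettings`, `tap_toggle_visible`, `row_tap_starts_call`) is hypothetical and invented for illustration. It is a model of the logic, not a description of how iOS actually stores or exposes these settings.

```python
from dataclasses import dataclass
from enum import Enum


class Layout(Enum):
    """The two Phone app layouts discussed in this article."""
    CLASSIC = "classic"
    UNIFIED = "unified"


@dataclass
class PhoneSettings:
    """Hypothetical model: one layout choice, shown in both the Phone
    app and Settings, plus one switch that only matters in Unified."""
    layout: Layout
    tap_recents_to_call: bool = False  # off by default, per the new behavior

    def tap_toggle_visible(self) -> bool:
        # The switch sits under the layout picker and is shown only
        # when Unified is the selected layout.
        return self.layout is Layout.UNIFIED

    def row_tap_starts_call(self) -> bool:
        # Classic always keeps the original tap-anywhere-to-call behavior.
        if self.layout is Layout.CLASSIC:
            return True
        # Unified calls on a row tap only if the user opted in.
        return self.tap_recents_to_call


settings = PhoneSettings(layout=Layout.UNIFIED)
print(settings.tap_toggle_visible(), settings.row_tap_starts_call())
```

Because the layout and the switch live in the same model, any screen that shows the switch can also show the layout choice that governs it, which is the whole point of mirroring the picker in Settings.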

The confusion would be eliminated if the Classic/Unified toggle were mirrored in Settings. That would make it clear why “Tap Recents to Call” only appears when you’re using Unified — because your choice to use Unified (or Classic) would be right there. ★

-

Or, Apple could offer separate “Tap Recents to Call” options for both Classic and Unified. With Classic, it would default to On (the default behavior since 2007), and with Unified, default to Off (the idiomatically correct behavior for modern iOS). In that case, the descriptive text for the option would *need* to explain that it’s a separate setting for each layout, or perhaps the toggle labels could be “Tap Recents to Call in Classic” and “Tap Recents to Call in Unified”. But somehow it would need to be made clear that they’re separate switches. But this is already getting more complicated. I think it’d be simpler to just keep the classic tap-in-row behavior with the Classic layout, and offer this setting only when using the Unified view. ↩︎

Friday, 27 February 2026

- TUDUMB ★

-

MG Siegler, writing at Spyglass:

Of course, Netflix could have absorbed such a cost. It’s a $400B company (well, before this deal, anyway) — double Disney! Paramount Skydance? They’re worth $11B. Yes, they’re paying almost exactly $100B more than they’re worth for WBD. Yes, it’s looney. But really, it’s leverage.

To be clear, Netflix was going to pay for the deal with debt too, but they have a clear path to repay such debts. They have a great, growing business. They don’t require the backstop of one of the world’s richest men, who just so happens to be the father of the CEO. How on Earth is Paramount going to pay down this debt? I’m tempted to turn to another bit of Paramount IP for the answer:

- Step one

- Step two

- ????

- PROFIT!!!

- Block Lays Off 4,000 (of 10,000) Employees ★

-

CNBC:

Block said Thursday it’s laying off more than 4,000 employees, or about half of its head count. The stock skyrocketed as much as 24% in extended trading.

“Today we shared a difficult decision with our team,” Jack Dorsey, Block’s co-founder and CEO, wrote in a letter to shareholders. “We’re reducing Block by nearly half, from over 10,000 people to just under 6,000, which means that over 4,000 people are being asked to leave or entering into consultation.” [...]

Other companies like Pinterest, CrowdStrike and Chegg have recently announced job cuts and directly attributed the layoffs to AI reshaping their workforces.

In an X post, Dorsey said he was faced with the choice of laying off staffers over several months or years “as this shift plays out,” or to “act on it now.”

Dorsey’s letter to shareholders was properly upper-and-lowercased; his memo to employees, which he posted on Twitter/X, was entirely lowercase. That’s a telling sign about who he respects. Dorsey, in that memo to employees:

we’re not making this decision because we’re in trouble. our business is strong. gross profit continues to grow, we continue to serve more and more customers, and profitability is improving. but something has changed. we’re already seeing that the intelligence tools we’re creating and using, paired with smaller and flatter teams, are enabling a new way of working which fundamentally changes what it means to build and run a company. and that’s accelerating rapidly.

i had two options: cut gradually over months or years as this shift plays out, or be honest about where we are and act on it now. i chose the latter. repeated rounds of cuts are destructive to morale, to focus, and to the trust that customers and shareholders place in our ability to lead. i’d rather take a hard, clear action now and build from a position we believe in than manage a slow reduction of people toward the same outcome.

AI is going to obviate a lot of jobs, in a lot of industries. So it goes. But in the case of these tech companies — exemplified by Block — it’s just a convenient cover story to excuse absurd over-hiring in the last 5–10 years. Say what you want about Elon Musk, but he was absolutely correct that Twitter was carrying a ton of needless employees. This reckoning was coming, and “AI” is just a convenient scapegoat.

Thursday, 26 February 2026

- Apple Announces F1 Broadcast Details, and a Surprising Netflix Partnership ★

-

Jason Snell, writing at Six Colors:

Perhaps the most surprising announcement on Thursday was that Apple and Netflix, which have had a rather stand-offish relationship when it comes to video programming, have struck a deal to swap some Formula One-related content. Formula One’s growing popularity in the United States is due, perhaps in large part, to the high-profile success of the Netflix docuseries “Drive to Survive.” The latest season of that series, debuting Friday, will premiere simultaneously on both Netflix and Apple TV. Presumably, in exchange for that non-exclusive, Apple will also non-exclusively allow Netflix to broadcast the Canadian Grand Prix in May. (Insert obligatory wish that Apple and Netflix would bury the hatchet and enable Watch Now support in the TV app for Netflix content.)

What a crazy cool partnership.

- Energym ★

-

“An interview from 2036 with Elon Musk, Jeff Bezos, and Sam Altman.” This is what AI video generation was meant for.

- Netflix Backs Out of Bid for Warner Bros., Paving Way for Paramount Takeover ★

-

The New York Times:

Netflix said on Thursday that it had backed away from its deal to acquire Warner Bros. Discovery, a stunning development that paves the way for the storied Hollywood media giant to end up under the control of a rival bidder, the technology heir David Ellison.

Netflix said that it would not raise its offer to counter a higher bid made earlier this week by Mr. Ellison’s company, Paramount Skydance, adding in a statement that “the deal is no longer financially attractive.”

“This transaction was always a ‘nice to have’ at the right price, not a ‘must have’ at any price,” the Netflix co-chief executives, Ted Sarandos and Greg Peters, said in a statement.

Netflix’s stock is up 9 percent in after-hours trading. This is like when you have a friend (Netflix) dating a good-looking-but-crazy person (Warner Bros.), and the good-looking-but-crazy person does something to give your friend second thoughts. You tell your friend to run away.

- iPhone and iPad Approved to Handle Classified NATO Information ★

-

Apple Newsroom:

Today, Apple announced iPhone and iPad are the first and only consumer devices in compliance with the information assurance requirements of NATO nations. This enables iPhone and iPad to be used with classified information up to the NATO restricted level without requiring special software or settings — a level of government certification no other consumer mobile device has met.

That’s nice, but the iPhone is only the second phone to be approved for handling classified information for the Board of Peace. The first, of course, was the T1.

- ‘Steve Jobs in Exile’ ★

-

New book, shipping May 19, from author Geoffrey Cain:

For twelve years, from 1985 to 1997, Jobs wandered the business wilderness with his new venture, NeXT. It was a period of spectacular failures, near-bankruptcy, and brutal humiliation. But out of this crucible of defeat emerged the visionary leader who would go on to create the iPod, iPhone, and iPad, transforming Apple into the most valuable company on earth.

Drawing on previously unpublished materials and new interviews with the key players, Geoffrey Cain reveals the untold story of Steve Jobs’s “lost decade” — the formative years that shaped the icon we thought we knew.

Afterword by Ed Catmull, who was obviously intimately familiar with Jobs in that era. And via Cain’s post on LinkedIn announcing the book, the foreword is by NeXT cofounder Dan’l Lewin.

- Microsoft Adds Additional Markdown Features to Windows Notepad ★

-

Still feels a bit ridiculous to me that Markdown is now an editing mode in Notepad.

- Prediction ‘Market’ Kalshi Accuses MrBeast Editor of Insider Trading ★

-

Bobby Allyn, reporting for NPR:

An editor who works for YouTube’s biggest creator, MrBeast, has been suspended from the prediction market platform Kalshi and reported to federal regulators for insider trading, Kalshi officials said on Wednesday. It’s the first time the company has publicly revealed the results of an investigation into market manipulation on the popular app.

The MrBeast employee, who Kalshi identified as Artem Kaptur in regulatory filings, traded around $4,000 on markets related to the streamer, the company said. Kalshi investigators discovered that Kaptur had “near-perfect trading success” on bets about the YouTuber’s videos with low odds, making the wagers appear suspicious, according to company officials.

Call these things what they are — prediction casinos, not prediction markets — and the problems come into focus.

My 2025 Apple Report Card

Wednesday, 25 February 2026

This week Jason Snell published his annual Six Colors Apple Report Card for 2025. As I’ve done in the past — for the report-card years 2024, 2023, 2022, 2021, 2020, 2019, 2018 — I’m publishing my full remarks and grades here. On Snell’s report card, voters give per-category scores ranging from 5 to 1; I’ve translated these to letter grades, A to F, which is how I consider them. (See footnote 1 from last year’s report if you’re curious why it’s not A to E.)

As I noted last year, “Siri/Apple Intelligence” is not a standalone category on the report card. I know Snell is very much trying to keep the number of different categories from inflating, but AI has been the biggest thing in tech for several years running. If it were a standalone category, last year I said I’d have given Apple a D for 2024. This year, I’d have given them an F — an utter, very public failure. (Their AI efforts in 2025 did end on a mildly optimistic note — they cleaned house.)

Mac: C (last year: A)

If there were separate categories for Mac hardware and MacOS, I’d give the hardware an A and MacOS 26 Tahoe a D. The hardware continues to be great — fast, solid, reliable — and Apple Silicon continues to improve year-over-year with such predictability that Apple is making something very difficult look like it must be easy.

Tahoe, though, is the worst regression in the entire history of MacOS. There are many reasons to prefer MacOS to any of its competition — Windows or Linux — but the one that has been the most consistent since System 1 in 1984 is the superiority of its user interface. There is nothing about Tahoe’s new UI — the Mac’s implementation of the Liquid Glass concept Apple has applied across all its OSes — that is better than its predecessor, MacOS 15 Sequoia. Nothing. And there is much that is worse. Some of it much worse. Fundamental principles of human-computer interaction — principles that Apple itself forged over decades — have been completely ignored. And a lot of it just looks sloppy and amateur. Simple things like resizing windows, and having application icons that look like they were designed by talented artists.

iPhone: A (last year: A)

iPhone 17 Pro and Pro Max are, technically, the best iPhones Apple has ever made. They’re very well designed too. The change to make the camera plateau span the entire width of the phone is a good one. It looks better, allows a naked iPhone 17 Pro to sit more steadily on a flat surface, and lets one in a case sit on a surface without any wobble at all. Apple even finally added a really fun, bold color — “cosmic orange” — that, surprising no one, seems to be incredibly popular with customers.